As technology advances, we generate data in more and more ways. From social media posts to online transactions, every activity a user performs leaves data behind. These massive volumes of data are known as Big Data, and while they hold enormous potential, accessing and processing them is challenging. Traditional, disk-based methods of storing and processing data are often too slow to keep up with the speed and scale of modern data.

This is where in-memory computing technology comes in. It processes data at high speed by avoiding the delays caused by reading from traditional disk storage. Since its introduction, it has been a game-changer, most notably in the world of Big Data. Let's look at how it works and why it is so powerful for Big Data applications.

What is In-Memory Computing (IMC)?

In-memory computing (IMC) is an approach in which data is stored and processed in main memory (RAM) rather than on a hard disk drive (HDD) or solid-state drive (SSD), as in traditional systems. Because RAM is much faster to read from and write to than disk storage, data can be processed directly where it resides. This removes the time spent moving data from disk into memory before it can be processed.

In a traditional disk-based system, data must be fetched from the drive whenever it needs to be accessed or processed, which adds noticeable latency. By contrast, in-memory systems can access and manipulate data much faster because the data is already loaded into memory. This fast access becomes critical when dealing with large amounts of data.
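To make the contrast concrete, here is a minimal Python sketch, assuming a small CSV file of hypothetical (user_id, amount) transactions. `total_from_disk` re-reads and parses the file for every query, the way a disk-bound system would, while `load_into_memory` reads it once and answers later queries from a dictionary held in RAM. The file layout and function names are illustrative assumptions, not part of any particular IMC product.

```python
import csv
import os
import tempfile


def write_sample_csv(path, rows):
    """Write hypothetical (user_id, amount) rows to a CSV file on disk."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["user_id", "amount"])
        writer.writerows(rows)


def total_from_disk(path, user_id):
    """Disk-bound style: re-open and parse the whole file on every query."""
    total = 0.0
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            if row["user_id"] == user_id:
                total += float(row["amount"])
    return total


def load_into_memory(path):
    """In-memory style: read the file once and keep per-user totals in a dict (RAM)."""
    totals = {}
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            user = row["user_id"]
            totals[user] = totals.get(user, 0.0) + float(row["amount"])
    return totals


if __name__ == "__main__":
    rows = [("u1", 10.0), ("u2", 5.5), ("u1", 2.5), ("u3", 7.0)]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "transactions.csv")
        write_sample_csv(path, rows)
        in_memory = load_into_memory(path)  # one disk read, then RAM lookups
        for user in ["u1", "u2", "u3"]:
            # Same answers either way; only where the data lives differs.
            assert total_from_disk(path, user) == in_memory.get(user, 0.0)
        print(in_memory)
```

Both approaches return the same totals; the in-memory version simply pays the disk-read cost once instead of on every query. In a toy example this small, the operating system's file cache can hide much of the disk latency, so the sketch illustrates the access pattern rather than serving as a benchmark.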

Impact of In-Memory Computing on Big Data

Big Data refers to datasets that are too large or complex for traditional data-processing software to handle efficiently. Processing such huge datasets can take hours or even days. This is where in-memory computing can make a big difference: by keeping data in memory, it enables real-time analysis, faster processing, and more efficient use of resources.

In-memory computing is also scalable and offers high performance. As data keeps growing, in-memory platforms can scale horizontally across many servers, allowing them to handle very large datasets quickly. This scalability makes IMC a leading choice for businesses building Big Data applications.

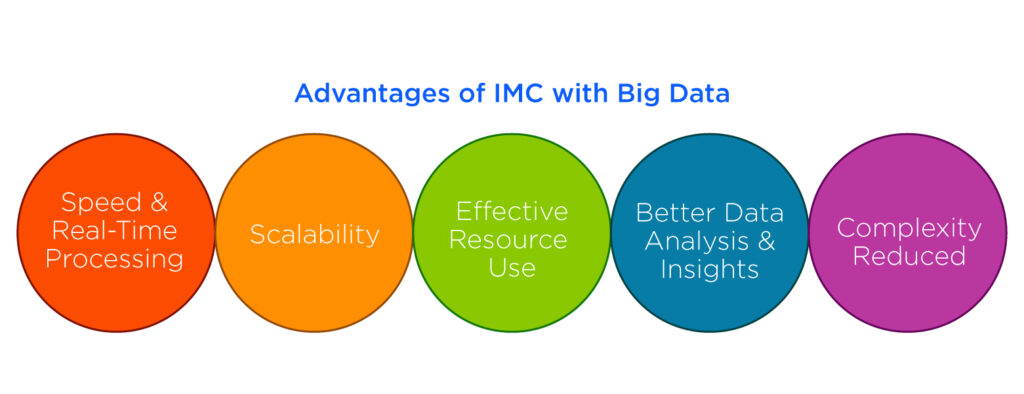

Advantages of IMC with Big Data

Speed and Real-Time Processing

The first and foremost advantage is speed. In Big Data, much of the value depends on how quickly data can be processed. Traditional methods, which rely on slower disk storage, introduce latency in data processing. IMC largely removes this bottleneck, enabling real-time data processing. This matters most in industries such as finance, healthcare, and e-commerce, where decisions based on real-time data make all the difference. For instance, real-time data analysis can help stock traders make split-second decisions.

Scalability

Big Data grows continuously. As companies gather more and more data, the systems they use need to scale. IMC technologies are designed to scale horizontally. This means that if more memory or computing power is needed, additional servers or resources can be added seamlessly. Unlike traditional systems, which might struggle to keep up with the growing data load, in-memory systems can expand easily to handle vast amounts of data.
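Horizontal scaling is commonly implemented by partitioning (sharding) data across nodes. The sketch below is a toy, single-process model of that idea, assuming each "node" is just a Python dict and keys are assigned to nodes by hashing. `PartitionedStore` and its methods are hypothetical names used only for illustration.

```python
import hashlib


class PartitionedStore:
    """Toy in-memory key-value store spread across several 'nodes' (plain dicts).

    Each key is assigned to a node by hashing it, so adding nodes spreads
    data and load across more memory (horizontal scaling).
    """

    def __init__(self, num_nodes):
        self.nodes = [dict() for _ in range(num_nodes)]

    def _node_for(self, key):
        # Use a stable hash: Python's built-in hash() of a str is salted per process.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.nodes)

    def put(self, key, value):
        self.nodes[self._node_for(key)][key] = value

    def get(self, key, default=None):
        return self.nodes[self._node_for(key)].get(key, default)

    def add_node(self):
        """Scale out by one node, then move every key to its new home."""
        items = [item for node in self.nodes for item in node.items()]
        self.nodes.append(dict())
        for node in self.nodes:
            node.clear()
        for key, value in items:
            self.put(key, value)

    def sizes(self):
        """Number of keys held by each node."""
        return [len(node) for node in self.nodes]


if __name__ == "__main__":
    store = PartitionedStore(num_nodes=3)
    for i in range(1000):
        store.put(f"customer:{i}", {"orders": i % 7})
    print("keys per node (3 nodes):", store.sizes())
    store.add_node()
    print("keys per node (4 nodes):", store.sizes())
    assert store.get("customer:42") == {"orders": 0}
```

This sketch uses simple modulo hashing, so adding a node reshuffles most keys. Production systems typically use consistent hashing or a fixed set of hash slots to limit how much data moves when a cluster grows, but the principle of spreading data across the memory of more machines is the same.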

Effective Resource Use

Because in-memory systems keep data in RAM, costly data retrieval from disk is kept to a minimum. This makes the overall system more efficient. Data-intensive tasks, such as complex calculations or running machine learning algorithms, complete faster and place less strain on resources. For a business, this can mean lower operational costs and better performance, especially during periods of peak data flow.

Better Data Analysis and Insights

In-memory computing makes Big Data analytics faster and more efficient. Because it can process data in real time, businesses can gain insights almost instantly. This enables them to react faster to changing market conditions, customer behavior, and other factors. For example, in the e-commerce industry, it allows retailers to track user behavior in real time and serve instant recommendations.
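The e-commerce example can be sketched as an in-memory tracker that updates its counters on every page view and answers recommendation requests straight from RAM. The scoring rule here (recommend the most-viewed unseen products in the user's favorite category) is a deliberately simple heuristic assumed for illustration; the class name, catalog format, and rule are hypothetical, and real recommender systems use far richer models.

```python
from collections import Counter, defaultdict


class RealTimeRecommender:
    """Hypothetical in-memory recommender: counters update on every view event."""

    def __init__(self, catalog):
        self.catalog = catalog                           # product_id -> category
        self.user_category_views = defaultdict(Counter)  # user -> views per category
        self.product_views = Counter()                   # product -> total views
        self.user_seen = defaultdict(set)                # user -> products already viewed

    def record_view(self, user_id, product_id):
        """Update all in-memory counters as soon as a view event arrives."""
        category = self.catalog[product_id]
        self.user_category_views[user_id][category] += 1
        self.product_views[product_id] += 1
        self.user_seen[user_id].add(product_id)

    def recommend(self, user_id, k=2):
        """Return up to k unseen products from the user's most-viewed category."""
        views = self.user_category_views.get(user_id)
        if not views:
            return []
        top_category, _ = views.most_common(1)[0]
        seen = self.user_seen[user_id]
        candidates = [p for p, c in self.catalog.items()
                      if c == top_category and p not in seen]
        # Most-viewed products first; ties broken by product id so results are deterministic.
        candidates.sort(key=lambda p: (-self.product_views[p], p))
        return candidates[:k]


if __name__ == "__main__":
    catalog = {"shoe-1": "shoes", "shoe-2": "shoes", "shoe-3": "shoes", "hat-1": "hats"}
    rec = RealTimeRecommender(catalog)
    events = [("bob", "shoe-2"), ("bob", "shoe-2"), ("bob", "shoe-3"), ("carol", "shoe-1")]
    for user, product in events:
        rec.record_view(user, product)
    print(rec.recommend("carol"))  # ['shoe-2', 'shoe-3']
```

Because every count lives in memory and is updated per event, a recommendation request needs no disk access and reflects the latest clicks immediately.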

Reduced Complexity

In traditional systems, large datasets quickly become complex and difficult to manage. IMC simplifies this by cutting down on slow disk I/O operations, allowing developers to focus on building innovative solutions instead of optimizing data storage and retrieval.

Evaluating the Use Cases of IMC in Big Data

Financial Services

In finance, every millisecond matters. Stock traders rely on real-time data to make quick decisions. In-memory computing enables them to process and analyze large amounts of financial data instantly, helping them spot trends, predict market movements, and make smarter investment choices.

Healthcare

Patient data is critical in healthcare facilities. Medical professionals can use IMC to quickly access patient records, analyze real-time medical data, and run predictive analytics for personalized care. Hospitals can also use it to optimize the allocation of resources such as critical equipment and staff.

Retail

Online retailers depend on personalized customer experiences. In-memory computing helps them track customer behavior in real time and offer personalized recommendations or discounts based on browsing patterns. During busy periods such as Black Friday or Cyber Monday, in-memory systems help keep the website running smoothly even under heavy traffic.

Telecommunications

Telecom companies handle enormous amounts of data, from call logs to internet usage. With IMC, this data can be processed quickly, which improves customer service and enables real-time analytics for billing, network optimization, and fraud detection.
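Real-time fraud detection often starts with simple rules evaluated over a sliding window of recent events. The sketch below assumes a hypothetical rule that flags a subscriber who places more than `max_calls` calls within `window_seconds`, and keeps each subscriber's recent call timestamps in memory so every new call can be checked immediately. The class name and threshold values are illustrative assumptions, not a telecom standard.

```python
from collections import defaultdict, deque


class CallRateMonitor:
    """Flags subscribers who place more than `max_calls` calls within `window_seconds`.

    All state lives in memory: one deque of recent call timestamps per subscriber.
    The default threshold and window are illustrative values only.
    """

    def __init__(self, max_calls=5, window_seconds=60):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.recent_calls = defaultdict(deque)

    def record_call(self, subscriber_id, timestamp):
        """Record a call (timestamps in seconds, assumed non-decreasing).

        Returns True if the subscriber now exceeds the allowed call rate.
        """
        calls = self.recent_calls[subscriber_id]
        calls.append(timestamp)
        # Evict calls that have fallen out of the sliding window.
        while calls and calls[0] <= timestamp - self.window_seconds:
            calls.popleft()
        return len(calls) > self.max_calls


if __name__ == "__main__":
    monitor = CallRateMonitor(max_calls=3, window_seconds=10)
    for t in [0, 1, 2, 3, 20]:
        # Prints False for t=0, 1, 2; True for t=3 (4 calls in 10 s); False for t=20.
        print(t, monitor.record_call("subscriber-7", t))
```

Since old timestamps are evicted as new calls arrive, each check only touches a subscriber's recent calls. A production system would typically partition this state across nodes and combine many such signals rather than rely on a single threshold.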

How Will IMC Transform Big Data?

The role of in-memory computing in Big Data will continue to evolve, and it will become more important as data volumes grow. One of the biggest changes we can expect is wider use of Artificial Intelligence (AI) and Machine Learning (ML). Because IMC can process vast amounts of data quickly, it can help organizations train and deploy AI and ML models faster, making their systems smarter.

For instance, in industries such as autonomous vehicles or personalized medicine, real-time data processing can improve the accuracy of decisions and predictions.

Another big change is the rise of edge computing. As Internet of Things (IoT) devices generate more data at the "edge" of networks, in-memory computing can process that data closer to its source, reducing delays and improving response times. This shift will benefit applications in autonomous systems, smart cities, and connected healthcare, where fast data processing is essential.

Additionally, quantum computing could change in-memory computing. Although the technology is still new, it has the potential to solve certain complex problems much faster than today's systems. By combining IMC with quantum computing, businesses may be able to tackle Big Data problems at unprecedented scale and speed.

Looking Ahead!

In-memory computing technologies have dramatically changed the way businesses handle Big Data. The ability to process and analyze data in real time has made these systems essential for industries that rely on speed, efficiency, and scalability. Whether it is offering better customer experiences, making quicker business decisions, or analyzing vast datasets, IMC provides businesses with the tools they need to stay competitive in the digital age.

With growing data volumes and emerging technologies, the future of in-memory computing looks promising. By unlocking the power of IMC, companies can harness Big Data to make faster, smarter decisions and to innovate and grow in the years ahead.

Read more about big data at SecureITWorld!

Frequently Asked Questions:

Q. What does in-memory computing mean?

A. In-memory computing refers to storing data in a computer's RAM instead of on traditional disk drives. This allows data to be accessed and processed much faster.

Q. What is the use of Big Data in technology?

A. Big Data helps businesses analyze large volumes of information to discover new trends, make better decisions, and improve customer experiences. It drives innovation in fields such as healthcare, finance, marketing, and more.

Q. What is the fastest way to process Big Data?

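A. A common approach is to use distributed computing frameworks such as Apache Hadoop or Apache Spark, which process data across multiple servers in parallel. Spark can also cache working datasets in memory across the cluster, which makes it a natural fit for the in-memory approach described in this article.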
